White Paper

Executive Summary

Information Technology (IT) professionals who are familiar with cloud computing technologies know the National Institute of Standards and Technology (NIST) definition of cloud computing and the characteristics of cloud services. In a nutshell, cloud computing is an abstraction of the underlying infrastructure to provide shared resources that can scale up or down. Whether the cloud is providing IT infrastructure services (Infrastructure as a Service), an application development platform (Platform as a Service) or pay-per-seat software applications (Software as a Service), it must be compliant and highly secure to meet a wide variety of data security categorization requirements.

Before organizations can plan for migration to the cloud, they must first perform a cloud suitability assessment, based on business and technical factors, to determine which of their on-premise applications belong in the cloud. Once that determination is made, the next phase is selecting a transformation approach for migration to the cloud. In general, application transformation or migration to the cloud is the process of redeploying an application on a newer platform and infrastructure provided by Cloud Service Providers (CSPs) to derive tangible financial and operational benefits as well as new scalability and resiliency capabilities.

At a high level, there are four application transformation approaches to the cloud: re-deploying, re-placing, re-architecting and re-building. Each transformation approach impacts the on-premise application stack at a different level; thus, each approach presents its own challenges and cost implications for obtaining the benefits of cloud services. In addition, government, commercial and non-profit organizations are starting to reap the benefits of using a cloud infrastructure to transform their IT support from a capital expense (“capex”) model to an operating expense (“opex”) model. The opex support model reduces the need for organizations to find and retain trained IT professionals to support this dynamic growth area. It also reduces support costs because CSPs can implement innovative hardware and software solutions quickly and at lower cost through their shared resource model.

Application Modernization and Migration Approach

Organizations are forced to constantly reassess their portfolio of applications to determine whether the applications still support the mission and provide business value. If business value is lacking or the mission has changed, an application can be retired or modernized to realign with, or create new, business value. Even when a legacy application provides value, its operations and maintenance can be time-consuming and resource-intensive, to the point that the recurring support costs outweigh the value it provides. Whether it is creating more value from the portfolio while reducing costs (performance engineering), creating a more manageable portfolio (portfolio optimization) or leveraging new technologies such as cloud computing (application modernization), organizations must constantly evolve or risk becoming obsolete.

The path to the cloud is not without risks. A well-defined strategy consisting of a) assessing an application’s readiness for the cloud, b) transforming or migrating it to the cloud, c) hosting the application in the cloud and d) continuing to implement performance improvement features mitigates the risks and controls costs (see Figure 1.0 below).

Figure 1.0 Cloud Migration Strategy

The biggest risk on the path to the cloud is the transformation or migration of the application into the cloud environment. The application’s performance can be negatively impacted (e.g., a failed migration, or the application being down for an extended period before the cutover), the end-user experience can be degraded (e.g., increased latency due to added hops to the application/data in the cloud) and the data security risk is elevated (i.e., the infrastructure is commercially owned and operated and shared with other customers). Depending on the performance and business requirements of the application, there are many transformation approaches to choose from. The table below (see Figure 2.0) provides different approaches, examples, benefits and the impact to the application stack.

| Transformation Approach | Example | Benefits | Impact to the Application Stack |
|---|---|---|---|
| Re-deploying (IaaS with few cloud characteristics) | Migrating legacy in-house physical/virtual servers to virtual machines in the cloud | Leverage more cost-effective computing resources | Platform/Framework: No Change; Programming Language: No Change; Applications/Dependencies: No Change and/or Update; Configuration/Metadata: No Change and/or Update; Data Store/Containers: No Change |
| Re-placing (SaaS) | Sunsetting an in-house application farm by replacing it with software managed services | Focus on the mission while leveraging a turn-key, externally managed solution | Platform/Framework: N/A; Programming Language: N/A; Applications/Dependencies: N/A; Configuration/Metadata: N/A; Data Store/Containers: No Change and/or Convert |
| Re-architecting (IaaS with all cloud characteristics) | Enhancing the application in the cloud to support an on-demand model | Leverage cloud characteristics such as elasticity, resiliency, and scalability | Platform/Framework: No Change; Programming Language: No Change; Applications/Dependencies: Change; Configuration/Metadata: Change; Data Store/Containers: No Change and/or Convert |
| Re-building (PaaS) | Replacing legacy siloed platforms with modern frameworks such as Java, .NET, Windows Azure, Salesforce, Cloud Foundry, etc. | Develop and deploy simple applications into production more rapidly by leveraging the provider’s templates, data model, and other pre-built components | Platform/Framework: New; Programming Language: No Change and/or New; Applications/Dependencies: New; Configuration/Metadata: New; Data Store/Containers: No Change and/or Convert |

Figure 2.0 – Cloud Transformation Options

Security Controls

Transforming the application across the relevant stack and ensuring it is functional and meets performance requirements in the cloud can be a huge undertaking. This transformation is also an opportunity to practice secure coding and to embed the appropriate security controls into the development of the application. Much like the Department of Defense (DoD) information assurance concept of defense in depth, the application stack must employ a similar concept to ensure security is in place for cloud services and the applications that leverage them. For Federal consumers of cloud services, the CSP must be compliant with the Federal Risk and Authorization Management Program (FedRAMP) security controls and the agency-specific controls. For DoD mission owners, FedRAMP, the Defense Information Systems Agency (DISA) Cloud Computing Security Requirements Guide (CC SRG) authorization and additional agency-specific controls are required before operational data can be hosted in the cloud.

DoD mission owners should use FedRAMP and DISA evaluations in a reciprocity-based fashion by leveraging the assessment to dramatically speed up the agency specific authorization process while focusing at the higher layer of the application stack where the security controls have not been independently assessed and authorized. For example, Amazon Web Services (AWS) and Microsoft Azure are two of the largest CSPs. As a CSP that has already been authorized by the DoD, each CSP is required to undergo assessment against the FedRAMP+ controls established in the CC SRG. Both AWS and AZURE have completed this assessment and has received up to

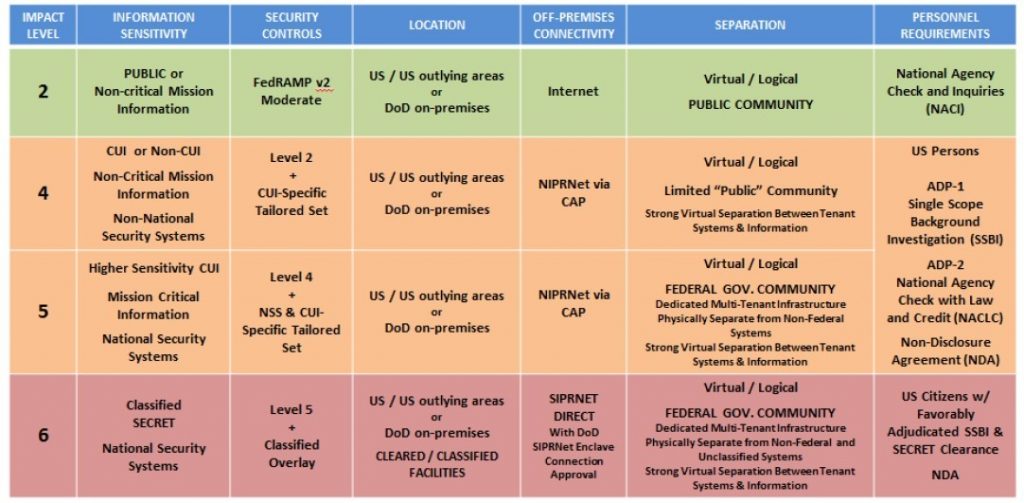

a full Impact Level 5 (see Figure 3.0) Provisional Authorization allowing mission owners to migrate production workloads including: Export Controlled Data, Privacy Information, Protected Health Information, explicit Controlled Unclassified Information designation: For Official Use Only, Official Use Only, Law Enforcement Sensitive, Critical Infrastructure Information, Sensitive Security Information.

Figure 3.0 – CC SRG Impact Levels (reference DISA CC SRG V1R2)

More information can be found in the CC SRG, but it is important to note that at this time:

- Impact Level 2 (IL2) data can be accessed directly from the Internet and does not need to pass through the Internet Access Points (IAPs) or the mission owner Cloud Access Points (CAPs). This would mean less visibility into those cloud servers and fewer access controls on those servers is

- Segmentation is required between ILs (e.g., IL2 and IL4 servers or applications must be logically separated from each other), which prevents the easy lateral movement of adversaries when the lower-level system is compromised. IL2 does not require access controls as strong as those of IL4. If segmentation is not possible, a mitigation plan is to treat IL2 like IL4, with the same level of security controls.

- Personally identifiable information (PII) and Protected Health Information (PHI) are categorized as CUI and must be stored and processed, at a minimum, at IL4 CSPs. The CSPs can be Public or Government Community, as long as the Risk Management Framework controls are implemented. Encryption must be
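The impact-level rules in the list above can be expressed as a small decision helper. The sketch below is illustrative only: the category names and the category-to-level table are simplified assumptions, not the CC SRG’s authoritative categorization, and it assumes, by contrast with the IL2 rule above, that anything above IL2 is reached through a CAP.

```python
"""Illustrative helper for the CC SRG impact-level rules summarized above.

The category names and MIN_IMPACT_LEVEL table are simplified, hypothetical
examples; the CC SRG remains the authoritative source.
"""

# Hypothetical data category -> minimum impact level (simplified).
MIN_IMPACT_LEVEL = {
    "public": 2,  # non-controlled, publicly releasable data
    "pii": 4,     # CUI: stored and processed at IL4 or higher
    "phi": 4,     # CUI: stored and processed at IL4 or higher
}


def minimum_impact_level(categories):
    """Return the highest minimum IL required by any category in the workload.

    Raises KeyError for categories not in the (hypothetical) table.
    """
    return max(MIN_IMPACT_LEVEL[c] for c in categories)


def requires_cap(level):
    """IL2 may be reached directly from the Internet; above IL2 we assume a CAP."""
    return level > 2


def effective_level(system_levels, segmented):
    """Without segmentation, treat every co-hosted system like the highest IL present."""
    if segmented:
        return dict(system_levels)
    highest = max(system_levels.values())
    return {name: highest for name in system_levels}
```

For example, a workload mixing public content and PHI resolves to IL4, and an unsegmented IL2 portal hosted alongside an IL4 HR system is treated as IL4, matching the mitigation plan described above.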

Use Case – Securing Databases

Start with a known-good, equivalent baseline for all three environments: development, testing and operations. Be aware that there will be a tendency to drift away from this baseline because of the conflicting needs for security and for functionality that allows access to the system from both internal and external users or machines. The goal is to avoid generating too many exceptions to handle application limitations. Today’s strong security profile will decay unless the baseline is modified constantly or periodically to keep the system secure and up-to-date. Each deviation from the intended/architected security posture requires careful documentation, approval by a configuration control board, determination of risk and acceptance of that risk.
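Drift from the baseline can be detected by comparing each environment’s current settings against the known-good values and flagging any deviation that lacks an approved exception. The sketch below assumes configurations are available as simple name-to-value dictionaries; the setting names are hypothetical, and the `approved_exceptions` set is a stand-in for the configuration control board’s approval records.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deviation:
    setting: str
    expected: object
    actual: object
    approved: bool  # True if a CCB-approved risk acceptance exists (hypothetical record)


def find_drift(baseline, current, approved_exceptions):
    """Compare a current configuration against the known-good baseline.

    baseline, current: dicts of setting name -> value (hypothetical format).
    approved_exceptions: set of setting names with documented, approved risk acceptance.
    A setting missing on either side is reported with a value of None.
    """
    deviations = []
    for setting in sorted(baseline.keys() | current.keys()):
        expected = baseline.get(setting)
        actual = current.get(setting)
        if expected != actual:
            deviations.append(
                Deviation(setting, expected, actual, setting in approved_exceptions)
            )
    return deviations


def unapproved(deviations):
    """Deviations that still need documentation, risk determination and approval."""
    return [d for d in deviations if not d.approved]
```

Running `find_drift` against development, testing and operations in turn shows whether the three environments still share an equivalent baseline, and `unapproved` lists the items that still need to go before the board.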

Security recommendations for internal and external connected resources are to limit direct database access whenever possible. It is much harder to hack a database if an attacker cannot connect to it. Use firewalls in front of the data center (on-premise or cloud), network Access Control Lists, TNS invited nodes, Oracle Connection Manager, Oracle Database Firewall, etc. DBAs should use bastion hosts to manage databases.
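In Oracle Net, TNS invited nodes are configured in `sqlnet.ora` with the `TCP.VALIDNODE_CHECKING` and `TCP.INVITED_NODES` parameters. The Python sketch below only illustrates the underlying allow-list idea (a connection is accepted only from explicitly invited addresses, such as application servers and a DBA bastion host); it is not Oracle’s implementation, and the subnets are hypothetical.

```python
import ipaddress

# Hypothetical allow-list: the application-server subnet and a single DBA bastion host.
INVITED_NETWORKS = [
    ipaddress.ip_network("10.20.30.0/24"),   # application servers (assumed)
    ipaddress.ip_network("10.20.99.10/32"),  # bastion host for DBAs (assumed)
]


def is_invited(client_ip, invited=INVITED_NETWORKS):
    """Return True only if the client address falls inside an invited network."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in invited)
```

Everything not on the list, including other hosts on the same internal network, is refused, which is what makes direct database access harder to obtain.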

PeopleSoft database security controls for special accounts must be implemented. Application developers must be aware of these accounts and must not assume that the defaults can be used. This prevents the loss of time and money spent modifying code as the project moves through the develop, test and deploy cycles to operational status. To ensure a secure deployment, the following must be implemented:

- Secure the PeopleSoft database passwords for the key accounts: Connect ID, Access ID, IB and PS. Change default passwords, and change these passwords regularly. A password should never equal the username or be shared.

- The default tablespace should never be SYSTEM, and never for the Connect ID; only SYS and SYSTEM should use the SYSTEM tablespace.

- Encrypt the SYSADM password by using the password administration utility, “psadminutility”, to encrypt passwords in configuration files.

- Ensure “EnableDBMonitoring” (psappsrv.cfg) is always enabled. It is enabled by default and populates client_info with the user, IP address and program name.

- Use one PeopleSoft database per Oracle RDBMS instance. Production must be exclusive, with no demo databases on the production instance.

- User tablespaces should never use PSDEFAULT; it is reserved for application use only.

- Do not use SYSADM for day-to-day support; use named accounts.

Create Fewer Insiders with Passwords

To further increase the security of PeopleSoft as it moves through the life cycle, don’t share passwords between production and non-production. Rotate passwords regularly and constantly check for weak and default passwords. Don’t forget about Oracle database default accounts, and don’t use the same password for all of these special-case accounts.

Generate and use database audit data to provide visibility into PeopleSoft and Oracle databases as part of continuous monitoring. In most organizations, database auditing is done simply to check a compliance box. Auditing of cloud-hosted databases is too often overlooked (out of sight, out of mind), or it is assumed that the CSP will do it automatically (and for free) for the cloud-deployed system(s). This leads to a state where auditing is poorly defined, audit data is not reviewed, and mission requirements are not mapped to auditing, alerts or reports. Such auditing provides no value to the organization, system manager, operators or defenders. Instead, implement intelligent, mission-focused auditing and monitoring that uses PeopleSoft capabilities to transform audit data into actionable information, and use auditing as a mitigating control when necessary to proactively identify non-compliance and address compliance and security challenges.

Use encryption to protect the confidentiality of data in use, data at rest and data in motion, but don’t harbor misconceptions about database storage encryption. Encryption is not an access control tool and does not solve access control problems. Data is encrypted the same way regardless of the user (good actors and bad actors alike). Encryption does not protect against malicious employees, including malicious privileged employees and contractors; after all, DBAs have full access by role alone. Key management determines the success of encryption, so you, and only you, should control the keys. Encryption also has a performance cost, so not everything can be encrypted.

PeopleSoft and Oracle are Public Key Enabled. Through the use of servlets and provisioned scripts, DoD Public Key Infrastructure smartcards and the Common Access Card can be used to secure the transport of information, whether from an end user via a web page or portal or across machine-to-machine interfaces, so compliance with DoD strong authentication is readily supported. Oracle Transparent Data Encryption (TDE), used with PeopleSoft, requires no application code or database structure changes to implement and protects data at rest. TDE provides data protection if a disk drive, database file or backup tape of the database files is stolen or lost.
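Several of the special-account rules above (no password equal to the username, no default passwords, no password shared across accounts or between production and non-production, no SYSTEM default tablespace except for SYS and SYSTEM) lend themselves to an automated check. The sketch below runs against a hypothetical in-memory account inventory; `KNOWN_DEFAULTS` is a small illustrative list, and cleartext passwords appear only to keep the example short, since a real check would not handle them this way.

```python
from dataclasses import dataclass

# Illustrative (not exhaustive) default passwords; a real check would use a
# maintained list of Oracle and PeopleSoft defaults.
KNOWN_DEFAULTS = {"change_on_install", "manager", "sysadm"}


@dataclass
class Account:
    username: str
    password: str            # cleartext for illustration only
    default_tablespace: str
    environment: str         # e.g. "production" or "test"


def check_accounts(accounts):
    """Return (username, environment, finding) tuples for rule violations."""
    findings = []
    seen = {}  # password -> list of (username, environment) using it
    for a in accounts:
        if a.password.lower() == a.username.lower():
            findings.append((a.username, a.environment, "password equals username"))
        if a.password.lower() in KNOWN_DEFAULTS:
            findings.append((a.username, a.environment, "default password"))
        if (a.default_tablespace.upper() == "SYSTEM"
                and a.username.upper() not in {"SYS", "SYSTEM"}):
            findings.append((a.username, a.environment, "SYSTEM default tablespace"))
        seen.setdefault(a.password, []).append((a.username, a.environment))
    # A password used by more than one account, or by the same account in
    # production and non-production, is flagged on every account that uses it.
    for owners in seen.values():
        if len(owners) > 1:
            for username, environment in owners:
                findings.append((username, environment, "password reused"))
    return findings
```

A check like this can be scheduled as part of continuous monitoring, so weak, default and shared passwords are caught as the system moves from development to operations.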

Use Case – Migration Approach with a Focus on Authoritative Data Sources

Throughout the federal space, including the DoD, there is a need for Authoritative Data Sources (ADSs) that provide access to current, reliable and trusted data. ADSs enable independent systems to confidently consume data from, or publish data to, other systems, and they support the shift to net-centric operations. To achieve ADSs, some form of data transformation is required to ensure that high-quality data is shared. Transformation of the data, better known as Extract, Transform and Load (ETL), is the process of collecting, cleaning and summarizing data from various sources, then integrating and aggregating it according to business rules and needs. The ETL process can be performed before migrating the transformed data to a destination target such as the cloud, or it can be performed directly in the cloud.

Many ETL platforms perform this process on the on-premise operational data sources, after which the transformed data sources, or ADSs, can be migrated to the cloud. Some CSPs enable ETL in the cloud to simplify and expedite the storage, processing and transformation of data. Cloud-based ETL optimizes costs because resource utilization can be scaled up or down as needed. Depending on the CSP, the ETL platform can be third-party software deployed in an IaaS configuration or a native PaaS tool.

Whether ETL is done in the cloud (a simple relationship, such as a merge) or on-premise (complex relationships with multiple data sources), the data transformation approach consists of four phases:

- Data Cleansing/Normalization – Detecting and correcting incomplete, inaccurate and irrelevant records to improve the quality of data to determine what is the

- Data Integration – Standardize data definitions and structure of multiple data sources by using a common schema to achieve a common view of the data for enterprise

- Data Aggregation/Consolidation – Gather and package data from two or more data characteristics to derive value that is useful for reporting and statistical analysis

- Data Migration – Introduction of a new system, such as transferring ADSs to the Cloud to maximize value
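The four phases can be sketched as a small in-memory pipeline: cleanse each source, integrate the sources onto a common schema, aggregate the result for reporting, then load it into the target. The two source systems, their field names and the dictionary standing in for a cloud data store are all hypothetical; a production ETL platform would of course work against real databases.

```python
from collections import defaultdict

# Two hypothetical source systems describing the same entity with different schemas.
SOURCE_A = [
    {"emp_id": "001", "org": " logistics ", "hours": "40"},
    {"emp_id": "002", "org": "Finance", "hours": None},  # incomplete record
]
SOURCE_B = [
    {"EmployeeNumber": "003", "Department": "LOGISTICS", "Hours": "32"},
]


def cleanse(rows, dept_field, hours_field):
    """Phase 1: drop incomplete records and normalize values, per source."""
    clean = []
    for row in rows:
        if row.get(hours_field) is None or not row.get(dept_field):
            continue  # a real pipeline would log or quarantine the record
        fixed = dict(row)
        fixed[dept_field] = row[dept_field].strip().title()
        fixed[hours_field] = int(row[hours_field])
        clean.append(fixed)
    return clean


def integrate(a_rows, b_rows):
    """Phase 2: map each source onto one common schema."""
    common = [{"id": r["emp_id"], "dept": r["org"], "hours": r["hours"]} for r in a_rows]
    common += [{"id": r["EmployeeNumber"], "dept": r["Department"], "hours": r["Hours"]}
               for r in b_rows]
    return common


def aggregate(rows):
    """Phase 3: consolidate records into reporting-ready totals per department."""
    totals = defaultdict(int)
    for r in rows:
        totals[r["dept"]] += r["hours"]
    return dict(totals)


def migrate(summary, target):
    """Phase 4: load the result into the target (a dict standing in for a cloud store)."""
    target.update(summary)
    return target


def run_pipeline(target):
    a = cleanse(SOURCE_A, "org", "hours")
    b = cleanse(SOURCE_B, "Department", "Hours")
    return migrate(aggregate(integrate(a, b)), target)
```

Here the incomplete Finance record is dropped during cleansing, and the two differently formatted “logistics” values from the two sources merge into a single department total once they are normalized and integrated.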

The cloud is a new platform that enables increased agility at lower operational costs. Once an application has been assessed as cloud-ready, the optimal approach depends on the business value an organization wants to extract from migration to the cloud, weighed against the transformation costs. Whether the application and its associated data are categorized as an ADS or are simply a regular application on an Oracle platform, the high-level approach is the same, but the migration plan must detail the path from the “as-is” to the “to-be” environment.